Data Center Tiers and Why They Matter

According to Wikipedia’s definition, a data center is a repository that houses computing facilities like servers, routers, switches, and firewalls, as well as supporting components like backup equipment, fire suppression facilities, and air conditioning. A data center may be complex (a dedicated building) or simple (an area or room that houses only a few servers). Additionally, a data center may be private or shared.

Data centers house a network’s most critical systems and are vital to the continuity of daily operations. Consequently, the security and reliability of data centers and their information are a top priority for organizations.

Although data center designs are unique, they can generally be classified as internet-facing or enterprise (or “internal”) data centers. Internet-facing data centers usually support relatively few applications, which are typically browser-based, and have many users, most of them unknown. In contrast, enterprise data centers serve fewer users but host more applications, ranging from off-the-shelf to custom.

Today’s data center networks must better adapt to and accommodate business-critical application workloads. Also, data centers will have to increasingly adapt to virtualized workloads and to the ongoing enterprise transition to private and hybrid clouds.

What is the Tier System?

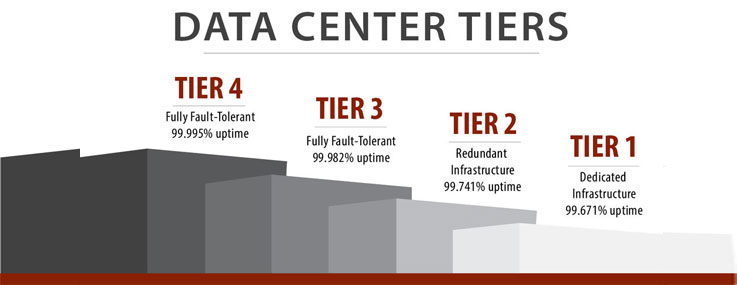

The Tier Classification System is a benchmarking system from Uptime Institute for rating the availability, or uptime, of a data center. A number of factors determine which tier a data center falls under, including power, cooling, and ancillary data center systems. Each higher tier delivers more uptime and data center performance, and requires more investment. Data centers range from Tier I to Tier IV, with each tier incorporating the requirements of the tiers below it. The tiers are progressive, with Tier I being the simplest and Tier IV the most resilient. Tier III is a common commercial solution for colocation and wholesale data center service providers. Tier IV data centers are designed for risk-averse businesses with mission-critical applications.
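To make "availability, or uptime" concrete, an availability percentage can be converted into expected downtime per year. The sketch below is only arithmetic; the function name is hypothetical and the input value is an illustrative number, not an Uptime Institute figure for any tier.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def annual_downtime_hours(availability_percent: float) -> float:
    """Return the expected hours of downtime per year for a given availability."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability must be between 0 and 100")
    return HOURS_PER_YEAR * (1.0 - availability_percent / 100.0)


if __name__ == "__main__":
    # 99.9 is an illustrative value only, not a published tier figure.
    print(round(annual_downtime_hours(99.9), 2))  # prints 8.76
```

Even a small difference in the availability percentage translates into hours of downtime per year, which is why higher tiers can justify a larger investment.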

• Tier I Basic Capacity data centers have a single path for power, using an uninterruptible power supply (UPS) to handle short outages, dedicated cooling systems, and engine generators for extended outages. A Tier I data center must have its own dedicated space, as well as a dedicated site infrastructure for IT support outside of the office. Tier I data centers offer no redundancy.

• Tier II Redundant Component data centers, like Tier I data centers, have a single path for power and cooling distribution, but add redundant components such as UPS modules, chillers or pumps, and engine generators, which protect them from interruptions in IT processes.

• Tier III Concurrently Maintainable data centers have redundant components and multiple distribution paths, so that no shutdowns are needed for maintenance, repair or replacement of equipment. Tier III data centers have active power and cooling distribution paths with dual-corded IT equipment.

• Tier IV Fault Tolerant data centers offer multiple power and cooling distribution paths with autonomous response to failure. Tier IV data centers are self-healing in the case of faults, are compartmentalized to limit the impact of a single major fault, and have Continuous Cooling for the transition from utility power to engine generators. As in Tier III data centers, IT equipment is dual-corded, so that in the event of equipment failure, IT operations are not interrupted.
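Because each tier builds on the one below it, the four descriptions above can be read as a simple decision ladder. The following is a minimal sketch of that idea, not an official Uptime Institute certification procedure; the class and field names are hypothetical and reduce each tier to a single yes/no property.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteInfrastructure:
    """Hypothetical, simplified description of a site (names are assumptions)."""
    redundant_components: bool         # e.g. spare UPS modules, chillers, generators
    multiple_distribution_paths: bool  # more than one power and cooling path
    fault_tolerant: bool               # autonomous response to failure


def classify_tier(site: SiteInfrastructure) -> str:
    """Walk up the ladder; stop at the first requirement the site lacks."""
    if not site.redundant_components:
        return "Tier I"
    if not site.multiple_distribution_paths:
        return "Tier II"
    if not site.fault_tolerant:
        return "Tier III"
    return "Tier IV"


if __name__ == "__main__":
    colocation_site = SiteInfrastructure(
        redundant_components=True,
        multiple_distribution_paths=True,
        fault_tolerant=False,
    )
    print(classify_tier(colocation_site))  # prints Tier III
```

The early returns mirror the progressive nature of the system: a site without redundant components is treated as Tier I regardless of what else it offers.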

Data Center Security

Most secure data centers require a special environment to operate, such as a dedicated data center room or an otherwise defined perimeter that provides access only to authorized personnel. They are high-risk environments that use large amounts of electrical power and heavy-duty equipment. Since data centers often serve educational, research or commercial entities, a malfunction can threaten sensitive personal or valuable commercial data, jeopardize user privacy and harm vulnerable environments.

Data centers must provide a secure, resilient and monitored environment for housing specialized IT equipment capable of hosting large volumes of data. Data confidentiality can be supported by electronic access systems that enforce physical security restrictions and enable role-based authorization.

An electronic lock with fobs distributed to responsible IT staff enables automated control of the physical barrier, as well as record monitoring and audit control. Most secure data centers have several security levels, organized by staff authorization responsibilities or assigned by clients. The IT equipment should be physically protected from environmental threats and power failures. Data center security can include specialized cards for main door access and tokens or cards to enable individual staff access.
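The combination of fobs, role-based authorization and audit records described above can be sketched in a few lines. This is an illustrative model only, not the interface of any real access control product; the zone names, roles and the `AccessController` class are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical security levels: which roles may enter which zone.
ZONE_ACCESS = {
    "main_door": {"technician", "network_admin", "security_officer"},
    "server_room": {"network_admin", "security_officer"},
    "client_cage": {"security_officer"},
}


@dataclass
class AccessController:
    fob_roles: dict                       # maps fob ID -> role of the holder
    audit_log: list = field(default_factory=list)

    def request_entry(self, fob_id: str, zone: str) -> bool:
        """Decide whether a fob may open a zone and record the attempt."""
        role = self.fob_roles.get(fob_id)  # None for an unknown fob
        allowed = role is not None and role in ZONE_ACCESS.get(zone, set())
        # Every attempt is logged, whether granted or denied, for later audit.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "fob_id": fob_id,
            "zone": zone,
            "allowed": allowed,
        })
        return allowed


if __name__ == "__main__":
    controller = AccessController(fob_roles={"fob-001": "technician"})
    print(controller.request_entry("fob-001", "main_door"))    # prints True
    print(controller.request_entry("fob-001", "server_room"))  # prints False
    print(len(controller.audit_log))                           # prints 2
```

Logging denied attempts alongside granted ones is a deliberate choice here, since an audit trail is most useful when it shows who tried to enter a restricted zone, not only who succeeded.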

Energy saving

Data centers worldwide use huge amounts of energy, and if that energy is derived from fossil fuels, they become significant sources of pollution. Governments are becoming aware of the problem and are launching green data center pilot programs.

In 2015, China launched a green data center pilot program. That same year, Alibaba, one of China’s largest cloud computing providers, inaugurated a data center near Hangzhou that uses solar energy and hydroelectric power. It also relies on lake water to cool its servers.

In the United States, data centers have significantly reduced their carbon footprint. All of Apple’s data centers are powered by clean energy, according to the company, and its new center in China will also use some renewable energy.

Microsoft and Amazon are also aiming to power their data centers with 100% renewable energy. Microsoft president Brad Smith said that the company is expected to pass the 70% mark by 2023. Amazon Web Services (the company’s data center arm) says it exceeded 50% renewable energy usage in 2018.

Prospects

Good planning is always a virtue, and enterprises and service providers can navigate a prudent course by ensuring that their data center networks have sufficient bandwidth for their critical applications and services, including increasingly virtualized and even containerized workloads.

As enterprises and service providers deploy new applications and extend existing applications, they will confront the bandwidth limitations of their current data center network infrastructure. Fortunately, specifications and standards have been proposed and are in place to address these requirements. For many companies, the need for greater bandwidth is already acute, and for others, it is just a matter of time.

Additional Sources: HowStuffWorks, HPE